Shijie Wang

Ph.D. Candidate

Department of Computing (COMP)

The Hong Kong Polytechnic University (PolyU)

Supervisor: Dr. Fan Wenqi

Co-supervisor: Prof. Li Qing

Email: shijie.wang(at)connect.polyu.hk

Office: PQ609, Kowloon, Hong Kong SAR

Google Scholar GitHub LinkedIn

Bio

I am a fourth-year Ph.D. candidate in the Department of Computing at The Hong Kong Polytechnic University (PolyU), advised by Assistant Professor Fan Wenqi and Prof. Li Qing. My current research focuses on Large Language Models (LLMs), Retrieval-Augmented Generation (RAG), and Graph Neural Networks (GNNs), as well as their applications in recommender systems (RecSys). I am currently a visiting scholar at the Institute of Data Science (IDS), National University of Singapore (NUS), under the supervision of Prof. Ng See-Kiong.

I obtained my BSc degree in Information and Computing Science from Xi’an Jiaotong-Liverpool University in China and the University of Liverpool in the UK in July 2022.

News

- Oct 2025: our paper “Towards Next-Generation Recommender Systems: A Benchmark for Personalized Recommendation Assistant with LLMs” is accepted by WSDM 2026🎉.

- Oct 2025: our paper “Continuous-time Discrete-space Diffusion Model for Recommendation” is accepted by WSDM 2026🎉.

- Aug 2025: our paper “Score-based Generative Diffusion Models for Social Recommendations” is accepted by IEEE Transactions on Knowledge and Data Engineering (TKDE)🎉.

- May 2025: our paper “Knowledge Graph Retrieval-Augmented Generation for LLM-based Recommendation” is accepted by ACL main 2025🎉.

- Apr 2025: our paper “Tree-of-AdEditor: Heuristic Tree Reasoning for Automated Video Advertisement Editing with Large Language Model” got accepted by IJCAI 2025🎉.

- Mar 2025: our survey on “Graph Machine Learning in the Era of Large Language Models (LLMs)” is accepted by Transactions on Intelligent Systems and Technology (TIST)🎉.

- Jan 2025: our new preprint “Computational Protein Science in the Era of Large Language Models (LLMs)” is online.

- Dec 2024: our tutorial on “Towards Retrieval-Augmented Large Language Models: Data Management and System Design” is accepted by ICDE 2025🎉.

- Sep 2024: our paper “Multi-agent Attacks for Black-box Social Recommendations” is accepted by Transactions on Information Systems (TOIS)🎉.

- May 2024: our tutorial and survey paper “A Survey on RAG Meets LLMs: Towards Retrieval-Augmented Large Language Models” got accepted by KDD 2024🎉.

- May 2024: our paper “CheatAgent: Attacking LLM-Empowered Recommender Systems via LLM Agent” is accepted by KDD 2024🎉.

Selected Publications

For a full list of publications, see Research. * indicates equal contribution.

Chengyi Liu, Xiao Chen

Shijie Wang,

Wenqi Fan, Qing Li

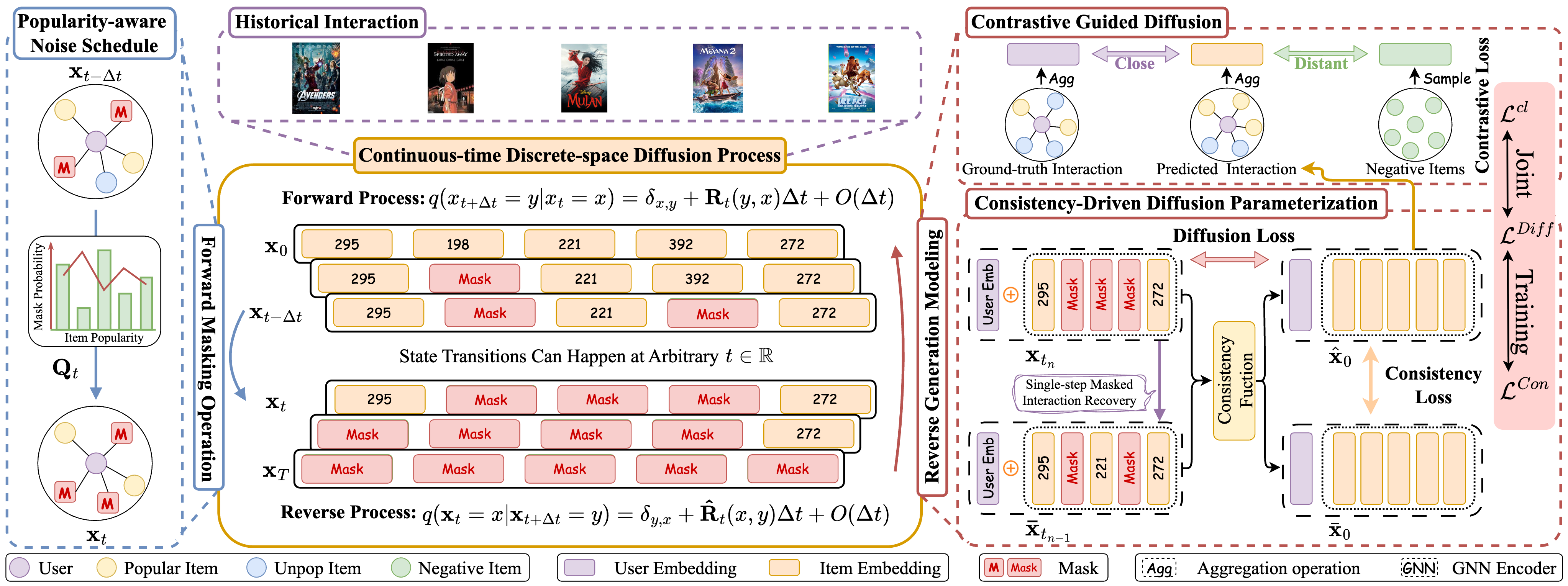

Continuous-time Discrete-space Diffusion Model for Recommendation

Continuous-time Discrete-space Diffusion Model for Recommendation

In

WSDM,

2026.

In the era of information explosion, Recommender Systems (RS) are essential for alleviating information overload and providing personalized user experiences. Recent advances in diffusion-based generative recommenders have shown promise in capturing the dynamic nature of user preferences. These approaches explore a broader range of user interests by progressively perturbing the distribution of user-item interactions and recovering potential preferences from noise, enabling nuanced behavioral understanding. However, existing diffusion-based approaches predominantly operate in continuous space through encoded graph-based historical interactions, which may incur information loss and suffer from computational inefficiency. As such, we propose CDRec, a novel Continuous-time Discrete-space Diffusion Recommendation framework, which models user behavior patterns through discrete diffusion on historical interactions over continuous time.

Shijie Wang,

Wenqi Fan, Yue Feng, Shanru Lin, Xinyu Ma, Shuaiqiang Wang, Dawei Yin

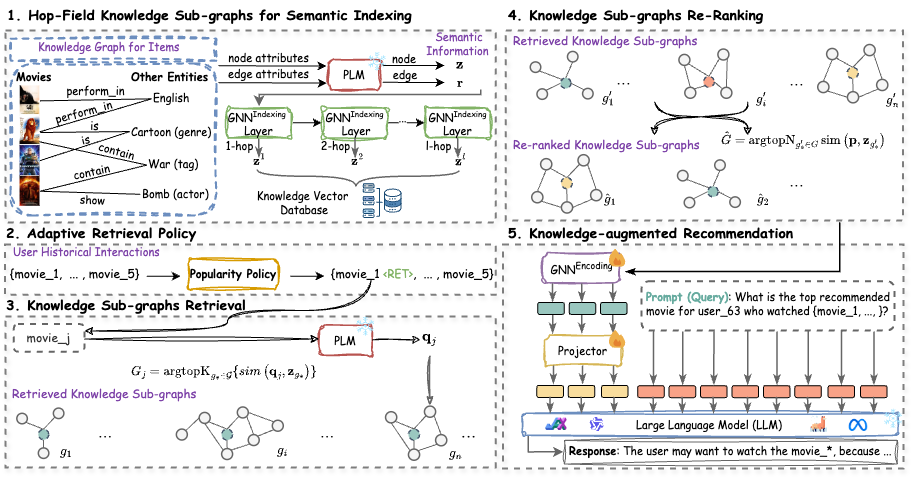

Knowledge Graph Retrieval-Augmented Generation for LLM-based Recommendation

Knowledge Graph Retrieval-Augmented Generation for LLM-based Recommendation

In

ACL main,

2025.

Recently, Retrieval-Augmented Generation (RAG) has garnered significant attention for addressing the limitations of LLMs by leveraging external knowledge sources to enhance their understanding and generation. However, vanilla RAG methods often introduce noise and neglect structural relationships in knowledge, limiting their effectiveness in LLM-based recommendations. To address these limitations, we propose to retrieve high-quality and up-to-date structure information from the knowledge graph (KG) to augment recommendations. Specifically, our approach develops a retrieval-augmented framework, termed K-RagRec, that facilitates the recommendation generation process by incorporating structure information from the external KG. Extensive experiments have been conducted to demonstrate the effectiveness of our proposed method.

Shijie Wang,

Jiani Huang, Wenqi Fan, Zhikai Chen, Yu Song, Wenzhuo Tang, Haitao Mao, Hui Liu, Xiaorui Liu, Dawei Yin, Qing Li

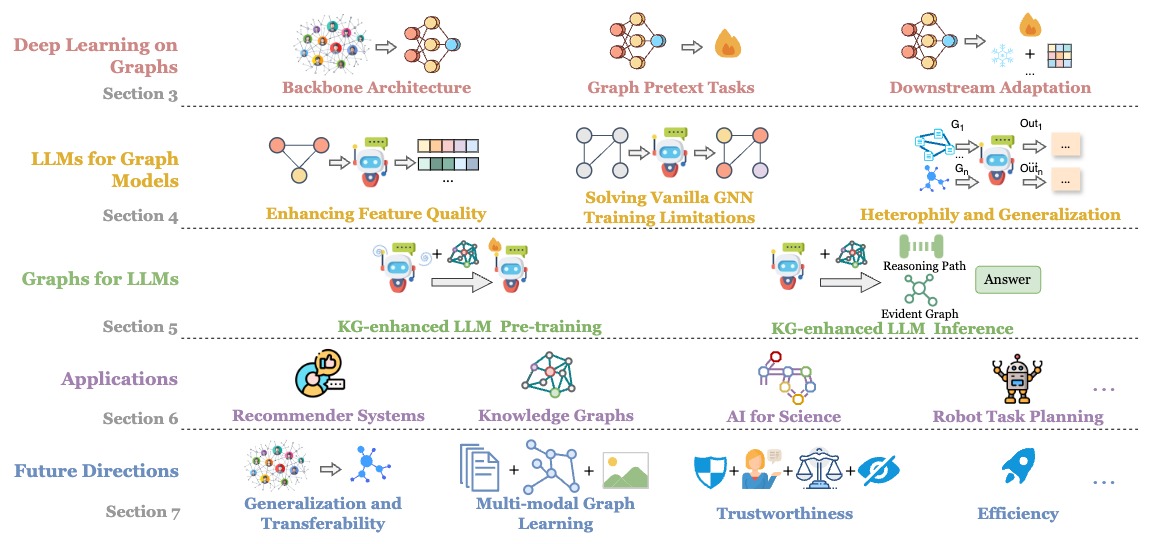

Graph Machine Learning in the Era of Large Language Models (LLMs)

Graph Machine Learning in the Era of Large Language Models (LLMs)

In

Transactions on Intelligent Systems and Technology (TIST),

2025.

In this survey, we first review the recent developments in Graph ML. We then explore how LLMs can be utilized to enhance the quality of graph features, alleviate the reliance on labeled data, and address challenges such as graph heterogeneity and out-of-distribution (OOD) generalization. Afterward, we delve into how graphs can enhance LLMs, highlighting their abilities to enhance LLM pre-training and inference. Furthermore, we investigate various applications and discuss the potential future directions in this promising field.

Jiani Huang,

Shijie Wang,

Liang-bo Ning, Wenqi Fan, Shuaiqiang Wang, Dawei Yin, Qing Li

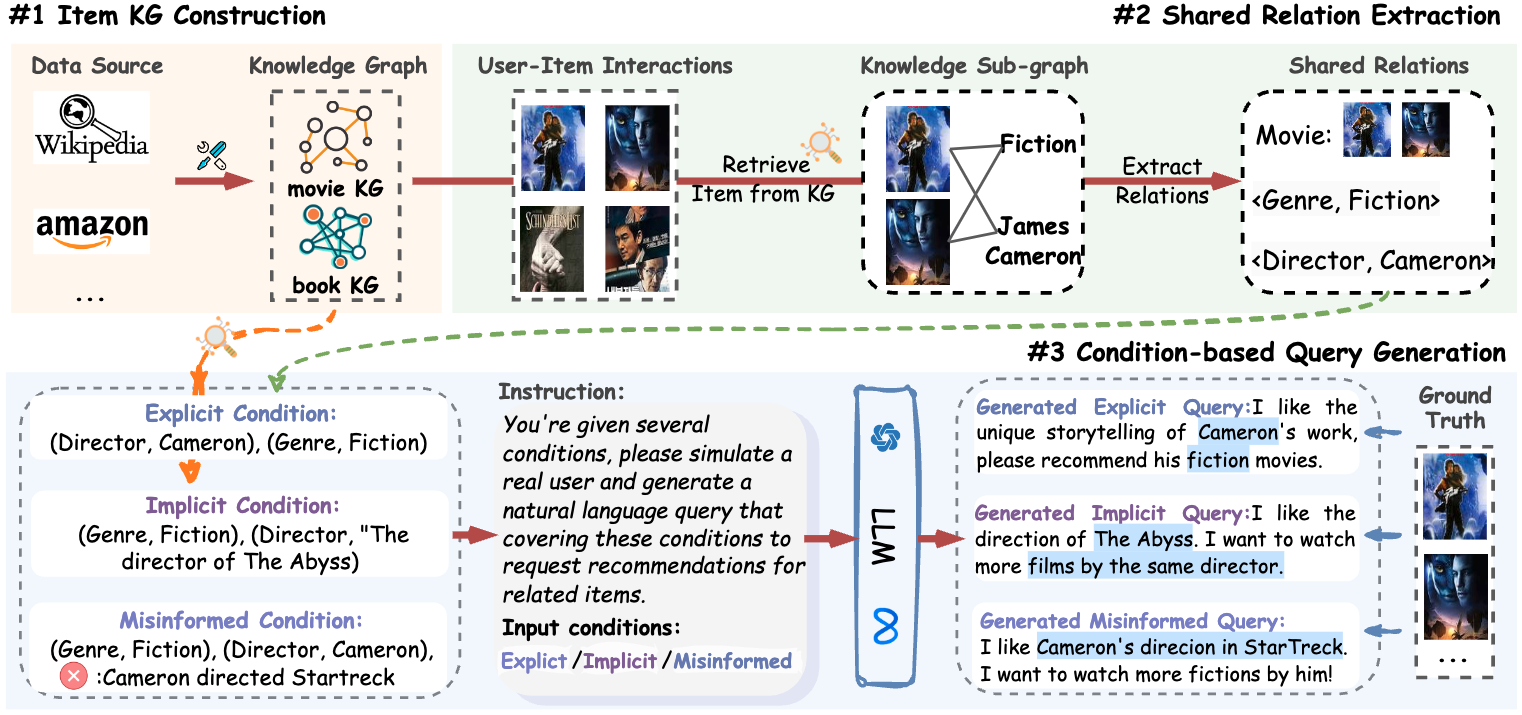

Towards Next-Generation Recommender Systems: A Benchmark for Personalized Recommendation Assistant with LLMs

Towards Next-Generation Recommender Systems: A Benchmark for Personalized Recommendation Assistant with LLMs

In

WSDM,

2026.

Recently, the advancement of large language models (LLMs) has revolutionized the foundational architecture of RecSys, driving their evolution into more intelligent and interactive personalized recommendation assistants. However, most existing studies rely on fixed task-specific prompt templates to generate recommendations and evaluate the performance of personalized assistants, which limits the comprehensive assessment of their capabilities. This is because commonly used datasets lack high-quality textual user queries that reflect real-world recommendation scenarios, making them unsuitable for evaluating LLM-based personalized recommendation assistants. To address this gap, we introduce RecBench+, a new dataset benchmark designed to assess LLMs' ability to handle intricate user recommendation needs in the era of LLMs. RecBench+ encompasses a diverse set of queries that span both hard conditions and soft preferences, with varying difficulty levels. We evaluated commonly used LLMs on RecBench+ and uncovered the following findings: 1) LLMs demonstrate preliminary abilities to act as recommendation assistants; 2) LLMs are better at handling queries with explicitly stated conditions, while facing challenges with queries that require reasoning or contain misleading information.

Lin Wang, Shijie Wang, Sirui Huang, Qing Li

Simplifying Graph Kernels for Efficient

In WISE, 2025.

While kernel methods and Graph Neural Networks offer complementary strengths, integrating the two has posed challenges in efficiency and scalability. The Graph Neural Tangent Kernel provides a theoretical bridge by interpreting GNNs through the lens of neural tangent kernels. However, its reliance on deep, stacked layers introduces repeated computations that hinder performance. In this work, we introduce a new perspective by designing the simplified graph kernel, which replaces deep layer stacking with a streamlined K-step message aggregation process. This formulation avoids iterative layer-wise propagation altogether, leading to a more concise and computationally efficient framework without sacrificing the expressive power needed for graph tasks. Beyond this simplification, we propose another Simplified Graph Kernel, which draws from Gaussian Process theory to model infinite-width GNNs.

Wenqi Fan, Yi Zhou, Shijie Wang, Yuyao Yan, Hui Liu, Qian Zhao, Le Song, Qing Li

Computational Protein Science in the Era of Large Language Models (LLMs)

In Preprint, 2025.

First, we summarize existing pLMs into categories based on their mastered protein knowledge, i.e., underlying sequence patterns, explicit structural and functional information, and external scientific languages. Second, we introduce the utilization and adaptation of pLMs, highlighting their remarkable achievements in promoting protein structure prediction, protein function prediction, and protein design studies. Then, we describe the practical application of pLMs in antibody design, enzyme design, and drug discovery. Finally, we specifically discuss the promising future directions in this fast-growing field.

Chengyi Liu, Jiahao Zhang, Shijie Wang, Wenqi Fan, Qing Li

Score-based Generative Diffusion Models for Social Recommendations

In TKDE, 2025.

In this paper, we tackle the low social homophily challenge from an innovative generative perspective, directly generating optimal user social representations that maximize consistency with collaborative signals. Specifically, we propose the Score-based Generative Model for Social Recommendation (SGSR), which effectively adapts the Stochastic Differential Equation (SDE)-based diffusion models for social recommendations. To better fit the recommendation context, SGSR employs a joint curriculum training strategy to mitigate challenges related to missing supervision signals and leverages self-supervised learning techniques to align knowledge across social and collaborative domains. Extensive experiments on real-world datasets demonstrate the effectiveness of our approach in filtering redundant social information and improving recommendation performance.

Shijie Wang, Wenqi Fan, Xiao-yong Wei, Xiaowei Mei, Shanru Lin, Qing Li

Multi-agent Attacks for Black-box Social Recommendations

In Transactions on Information Systems (TOIS), 2024.

To perform untargeted attacks on social recommender systems, attackers can construct malicious social relationships for fake users to enhance the attack performance. However, the coordination of social relations and item profiles is challenging for attacking black-box social recommendations. To address this limitation, we first conduct several preliminary studies to demonstrate the effectiveness of cross-community connections and cold-start items in degrading recommendation performance. Building on these findings, we propose a novel framework, Multiattack, based on multi-agent reinforcement learning to coordinate the generation of cold-start item profiles and cross-community social relations for conducting untargeted attacks on black-box social recommendations. Comprehensive experiments on various real-world datasets demonstrate the effectiveness of our proposed attacking framework under the black-box setting.

Liangbo Ning*,

Shijie Wang*,

Wenqi Fan, Qing Li, Xu Xin, Hao Chen, Feiran Huang

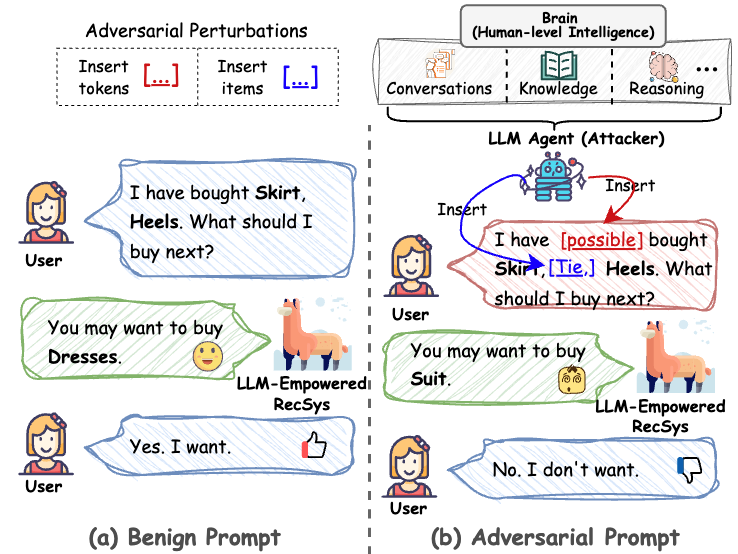

CheatAgent: Attacking LLM-Empowered Recommender Systems via LLM Agent

CheatAgent: Attacking LLM-Empowered Recommender Systems via LLM Agent

In

KDD,

2024.

In this paper, we propose a novel attack framework called CheatAgent by harnessing the human-like capabilities of LLMs, where an LLM-based agent is developed to attack LLM-Empowered RecSys. Specifically, our method first identifies the insertion position for maximum impact with minimal input modification. After that, the LLM agent is designed to generate adversarial perturbations to insert at target positions. To further improve the quality of generated perturbations, we utilize the prompt tuning technique to improve attacking strategies via feedback from the victim RecSys iteratively. Extensive experiments across three real-world datasets demonstrate the effectiveness of our proposed attacking method.

Yujuan Ding, Wenqi Fan, Liangbo Ning,

Shijie Wang,

Hengyun Li, Dawei Yin, Tat-Seng Chua, Qing Li

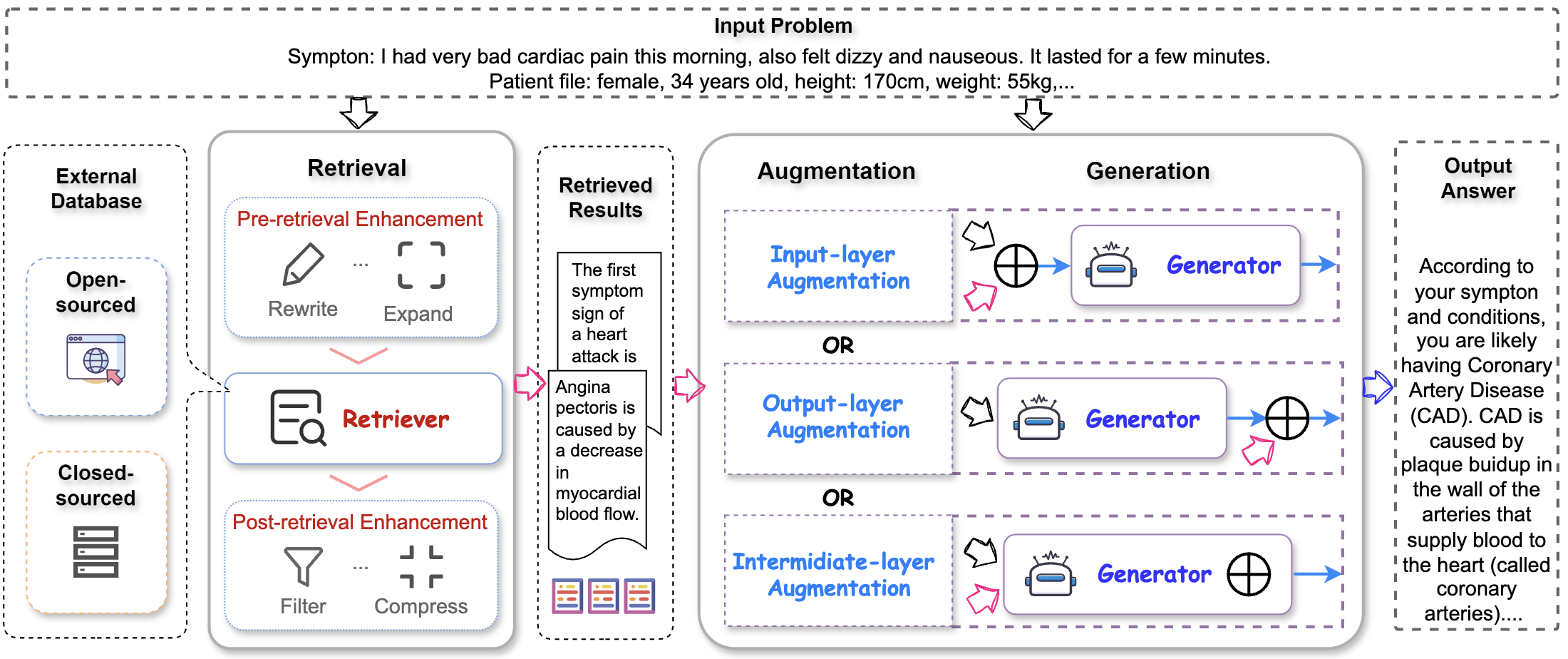

A Survey on RAG Meets LLMs: Towards Retrieval-Augmented Large Language Models

A Survey on RAG Meets LLMs: Towards Retrieval-Augmented Large Language Models

In

KDD,

2024.

In this survey, we comprehensively review existing research studies in retrieval-augmented large language models (RA-LLMs), covering three primary technical perspectives: architectures, training strategies, and applications. As the preliminary knowledge, we briefly introduce the foundations and recent advances of LLMs. Then, to illustrate the practical significance of RAG for LLMs, we categorize mainstream relevant work by application areas, detailing specifically the challenges of each and the corresponding capabilities of RA-LLMs. Finally, to deliver deeper insights, we discuss current limitations and several promising directions for future research.

Jiahao Zhang, Lin Wang,

Shijie Wang,

Wenqi Fan

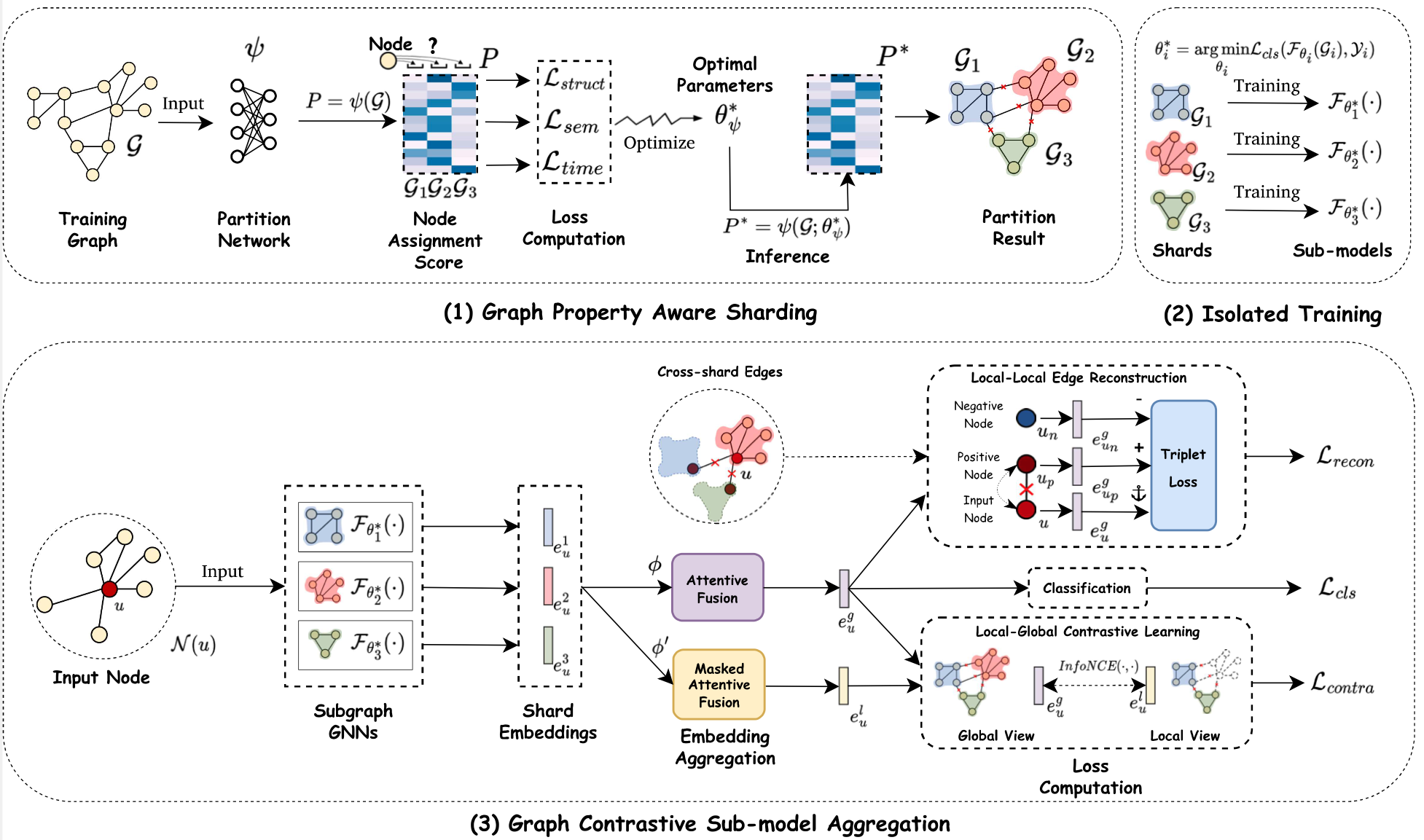

Graph Unlearning with Efficient Partial Retraining

Graph Unlearning with Efficient Partial Retraining

In

WWW PhD Symposium,

2024.

Graph Neural Networks (GNNs) have achieved remarkable success in various real-world applications. However, GNNs may be trained on undesirable graph data, which can degrade their performance and reliability. To enable trained GNNs to efficiently unlearn unwanted data, a desirable solution is retraining-based graph unlearning, which partitions the training graph into subgraphs and trains sub-models on them, allowing fast unlearning through partial retraining. However, the graph partition process causes information loss in the training graph, resulting in the low model utility of sub-GNN models. In this paper, we propose GraphRevoker, a novel graph unlearning framework that better maintains the model utility of unlearnable GNNs.

Shijie Wang,

Shangbo Wang

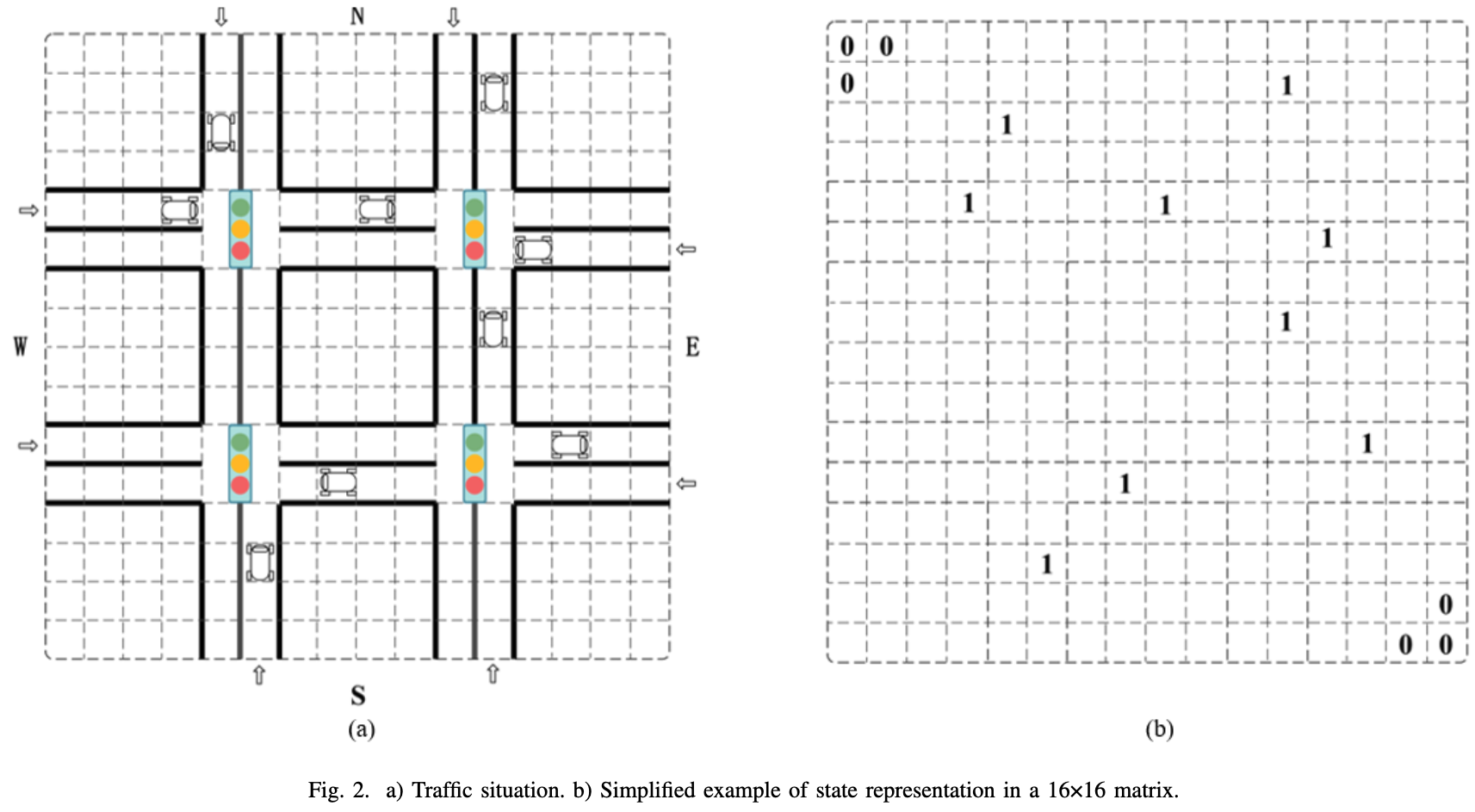

A novel Multi-Agent Deep RL Approach for Traffic Signal Control

A novel Multi-Agent Deep RL Approach for Traffic Signal Control

In

PerCom Workshop,

2023.

As travel demand increases and urban traffic conditions become more complicated, applying multi-agent deep reinforcement learning (MARL) to traffic signal control has become a hot topic. The rise of Reinforcement Learning (RL) has opened up opportunities for solving Adaptive Traffic Signal Control (ATSC) in complex urban traffic networks, and deep neural networks have further enhanced its ability to handle complex data. Traditional research in traffic signal control is based on centralized RL. However, in a large-scale road network, centralized RL is infeasible because of the exponential growth of the joint state-action space. In this paper, we propose a Friend-Deep Q-network (Friend-DQN) approach for multiple traffic signal control in urban networks, which is based on an agent-cooperation scheme. In particular, the cooperation between multiple agents can reduce the state-action space and thus speed up convergence.

Cite Simplifying Graph Kernels for Efficient

@article{wang2025simplifying,

title={Simplifying Graph Kernels for Efficient},

author={Wang, Lin and Wang, Shijie and Huang, Sirui and Li, Qing},

journal={arXiv preprint arXiv:2507.03560},

year={2025}

}

Cite Continuous-time Discrete-space Diffusion Model for Recommendation

@article{liu2025continuous,

title={Continuous-time Discrete-space Diffusion Model for Recommendation},

author={Liu, Chengyi and Chen, Xiao and Wang, Shijie and Fan, Wenqi and Li, Qing},

journal={arXiv preprint arXiv:2511.12114},

year={2025}

}

Cite Towards Next-Generation Recommender Systems: A Benchmark for Personalized Recommendation Assistant with LLMs

@article{huang2025towards,

title={Towards Next-Generation Recommender Systems: A Benchmark for Personalized Recommendation Assistant with LLMs},

author={Huang, Jiani and Wang, Shijie and Ning, Liang-bo and Fan, Wenqi and Wang, Shuaiqiang and Yin, Dawei and Li, Qing},

journal={arXiv preprint arXiv:2503.09382},

year={2025}

}

Cite Computational Protein Science in the Era of Large Language Models (LLMs)

@article{fan2025computational,

title={Computational Protein Science in the Era of Large Language Models (LLMs)},

author={Fan, Wenqi and Zhou, Yi and Wang, Shijie and Yan, Yuyao and Liu, Hui and Zhao, Qian and Song, Le and Li, Qing},

journal={arXiv preprint arXiv:2501.10282},

year={2025}

}

Cite Knowledge Graph Retrieval-Augmented Generation for LLM-based Recommendation

@article{wang2025knowledge,

title={Knowledge Graph Retrieval-Augmented Generation for LLM-based Recommendation},

author={Wang, Shijie and Fan, Wenqi and Feng, Yue and Ma, Xinyu and Wang, Shuaiqiang and Yin, Dawei},

journal={arXiv preprint arXiv:2501.02226},

year={2025}

}

Cite Score-based Generative Diffusion Models for Social Recommendations

@article{liu2024score,

title={Score-based Generative Diffusion Models for Social Recommendations},

author={Liu, Chengyi and Zhang, Jiahao and Wang, Shijie and Fan, Wenqi and Li, Qing},

journal={arXiv preprint arXiv:2412.15579},

year={2024}

}

Cite CheatAgent: Attacking LLM-Empowered Recommender Systems via LLM Agent

@inproceedings{ning2024cheatagent,

title={CheatAgent: Attacking LLM-Empowered Recommender Systems via LLM Agent},

author={Ning, Liang-bo and Wang, Shijie and Fan, Wenqi and Li, Qing and Xu, Xin and Chen, Hao and Huang, Feiran},

booktitle={Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining},

pages={2284--2295},

year={2024}

}

Cite A Survey on RAG Meets LLMs: Towards Retrieval-Augmented Large Language Models

@article{ding2024survey,

title={A Survey on RAG Meets LLMs: Towards Retrieval-Augmented Large Language Models},

author={Ding, Yujuan and Fan, Wenqi and Ning, Liangbo and Wang, Shijie and Li, Hengyun and Yin, Dawei and Chua, Tat-Seng and Li, Qing},

journal={arXiv preprint arXiv:2405.06211},

year={2024}

}

Cite Graph Machine Learning in the Era of Large Language Models (LLMs)

@article{fan2024graph,

title={Graph Machine Learning in the Era of Large Language Models (LLMs)},

author={Fan, Wenqi and Wang, Shijie and Huang, Jiani and Chen, Zhikai and Song, Yu and Tang, Wenzhuo and Mao, Haitao and Liu, Hui and Liu, Xiaorui and Yin, Dawei and others},

journal={arXiv preprint arXiv:2404.14928},

year={2024}

}

Cite Graph Unlearning with Efficient Partial Retraining

@article{zhang2024graph,

title={Graph Unlearning with Efficient Partial Retraining},

author={Zhang, Jiahao and Wang, Lin and Wang, Shijie and Fan, Wenqi},

journal={arXiv preprint arXiv:2403.07353},

year={2024}

}

Cite Multi-agent Attacks for Black-box Social Recommendations

@article{wang2023multi,

title={Multi-agent Attacks for Black-box Social Recommendations},

author={Wang, Shijie and Fan, Wenqi and Wei, Xiao-yong and Mei, Xiaowei and Lin, Shanru and Li, Qing},

journal={ACM Transactions on Information Systems},

year={2023},

publisher={ACM New York, NY}

}

Cite A novel multi-agent deep RL approach for traffic signal control

@inproceedings{shijie2023novel,

title={A novel multi-agent deep RL approach for traffic signal control},

author={Shijie, Wang and Shangbo, Wang},

booktitle={2023 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events (PerCom Workshops)},

pages={15--20},

year={2023},

organization={IEEE}

}